Future Manufacturing Research Grant: Gaze-Collab (Ongoing)

Researching novel gaze-based interaction and visualization techniques that facilitate remote human-human task collaboration, funded in part by the National Science Foundation.

2023 - present. Associated with: Columbia CGUI Lab, Prof. Steven K. Feiner, Prof. Barbara Tversky, and Ph.D. Jen-Shuo Liu

Background

In future manufacturing, co-workers can collaborate remotely with minimal cognitive effort.

In the description of the FMRG: Multi-Robot project, I explained how we prototyped and iterated on a Virtual Reality solution for teleoperation. The critical shift from a 2D UI to XR is that an immersive working environment helps users perceive working conditions quickly and intuitively, and perform spatial manipulations more efficiently. A key reason XR holds this advantage is that it considerably reduces mental (cognitive) effort. Users can interact with the interface with less confusion, which makes for a smoother, more natural, and more efficient collaboration experience. To build on this advantage, we began this research on cueing and pre-cueing techniques that apply cognitive technologies (gaze) in XR. These techniques could enhance the remote collaboration experience.

Early Works on Cueing and Pre-Cueing by Ph.D. Jen-Shuo Liu

Introduction & Ideation

In a collaborative context, knowing other collaborators' intentions and understanding their spatial actions are critical to effectiveness and efficiency. After reviewing the existing literature, we found that gaze in VR/AR has great potential to improve collaboration quality. It can do so by dynamically displaying gaze geometry and encouraging users to interact with it. Within this continuum of possible research directions, we identified the following areas to explore and potential research questions:

- How can we understand the psychological factors behind collaboration?

- What are the challenges in remote collaboration, and how can XR help?

- In a collaborative immersive virtual environment, what are the best ways to integrate gaze techniques?

- How can dynamic gaze visualization and interaction help with specific tasks in remote collaboration, such as object selection and verification?

- How does gaze in XR open new possibilities for collaboration on complex tasks that often involve hand-eye coordination?

......

Since this is an ongoing project, out of respect for my own and my partners' rights, I cannot share further insights at this time.

Study / Experiments / Implementation

This research project is still in a relatively early phase. We have not yet carried out a complete experiment for data collection and analysis.

Deliverables (Prototype)

We are currently working on this project intensively, targeting upcoming conferences and journals such as VR and CHI. As mentioned, this is an ongoing project, so I cannot share the details of our future work. I can, however, show some demos of the early prototype I built with Unity and Photon networking services.

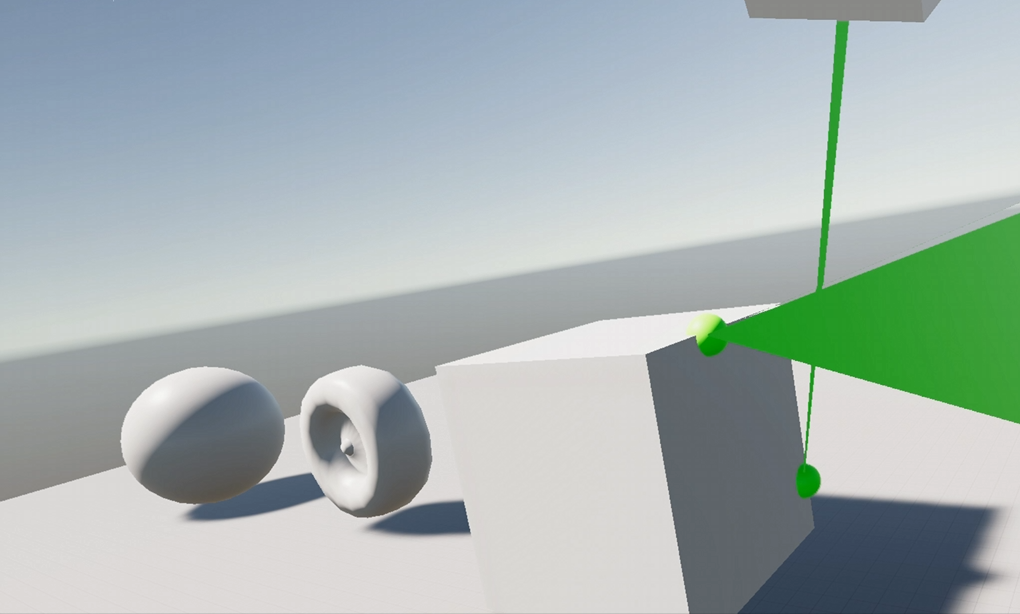

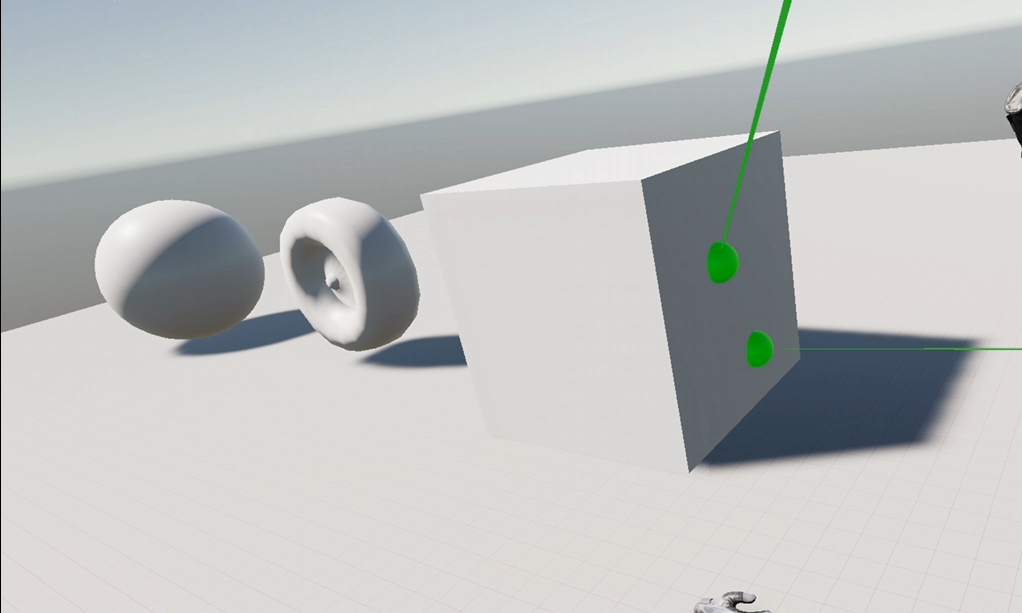

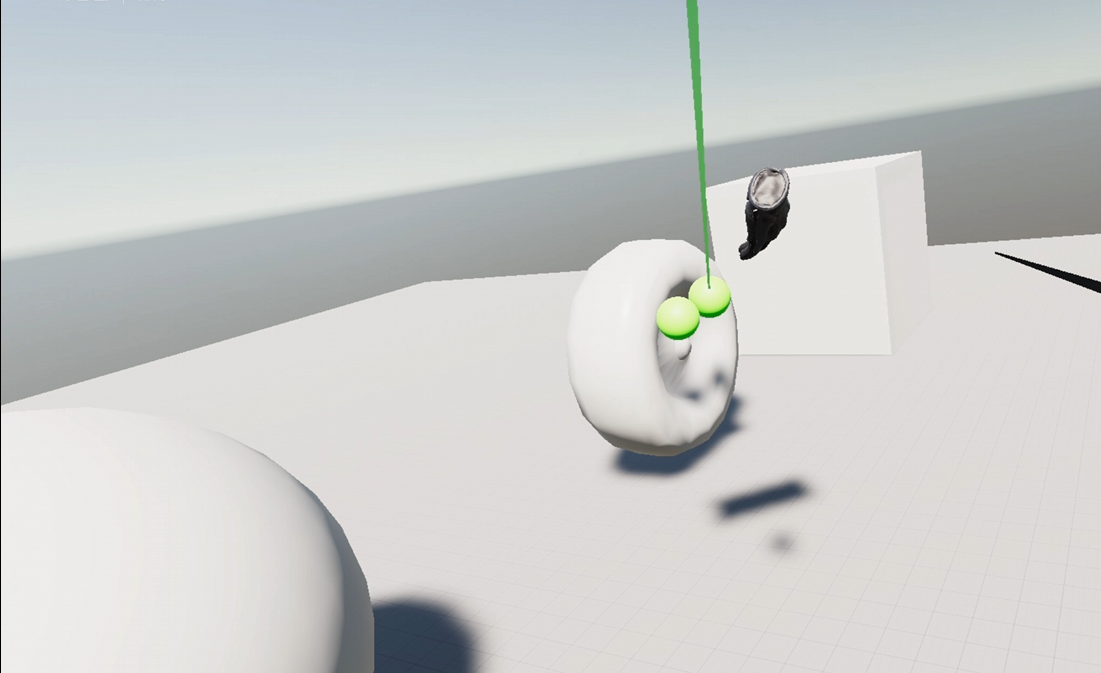

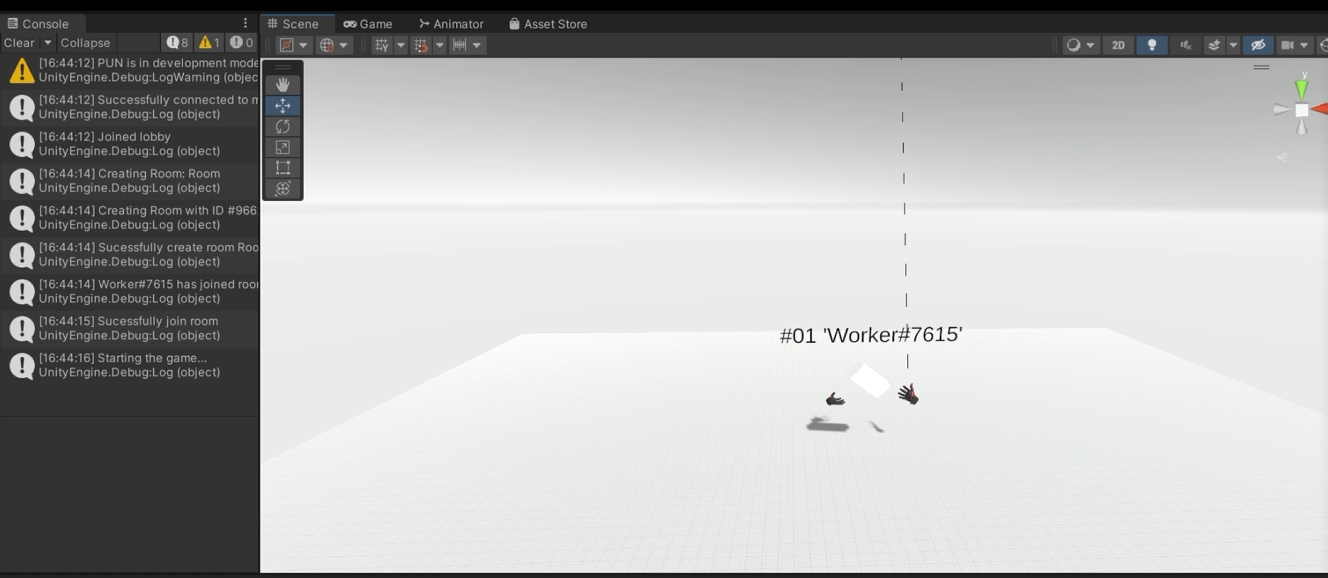

Prototype Demo 1 - Work Environment Showcase

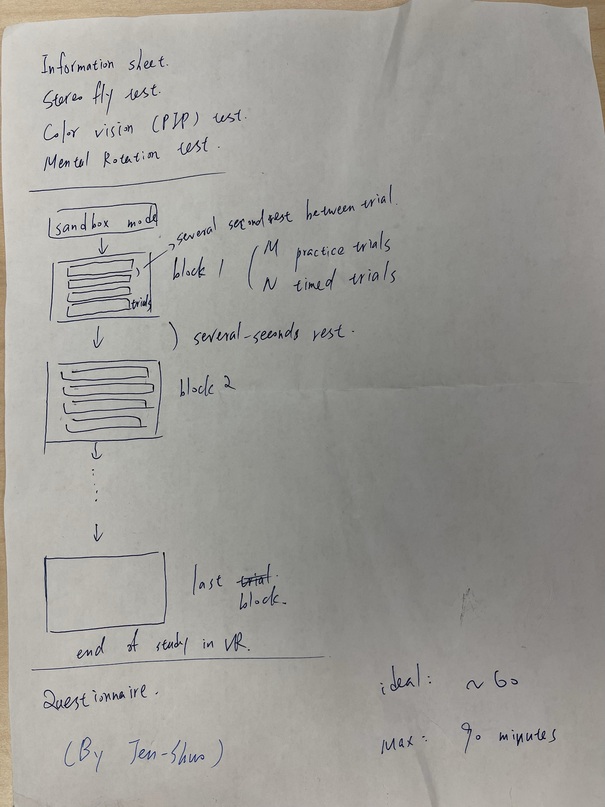

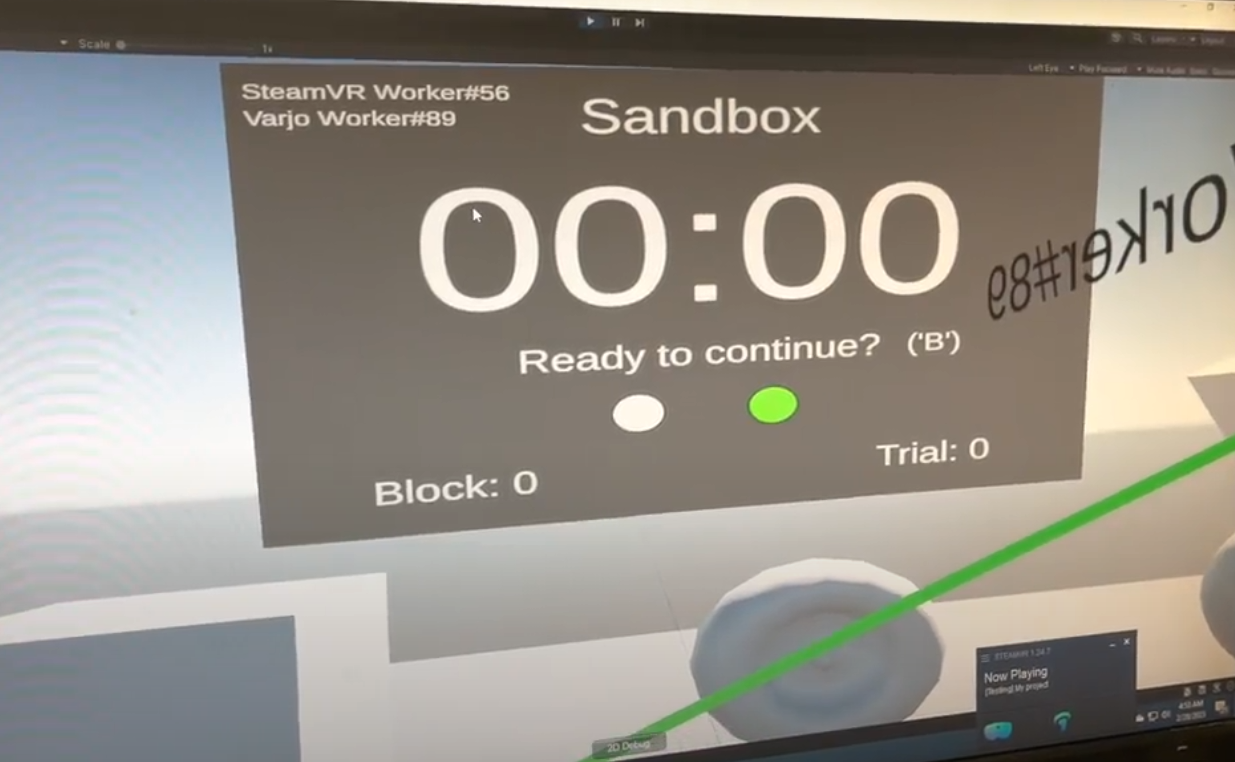

Prototype Demo 2 - Study Flow Management

My Major Roles & Contributions

- I work as a Research Assistant, HCI/XR/3DGUI Researcher, Designer, and Developer in the CGUI Lab.

- I iteratively design, develop, and prototype the VR system in Unity, with multiplayer networking and Varjo and HP Omnicept gaze tracking, adapting it to the shifting requirements of each implementation.

- I contribute creative ideas and insights to the weekly research discussion.

- I communicate and collaborate actively with other members of the lab.
