Episode 1:

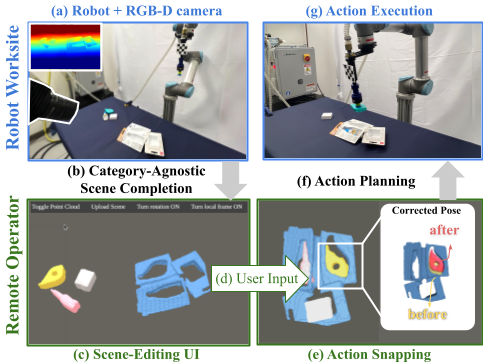

Traditionally, research on robot teleoperation has concentrated on action specification through robot-centric interfaces, which require users to control the robot's actions directly. However, this often demands considerable robotics expertise. Prof. Shuran and Steven led a project exploring an easier approach, "Scene Editing as Teleoperation" (SEaT), which allows users to manipulate digital replicas of real-world objects to set task goals. This framework transforms traditional robot-centric teleoperation into a scene-centric approach. The method uses algorithms to convert real-world spaces into virtual scenes and to refine user inputs.

SEaT

Showcase

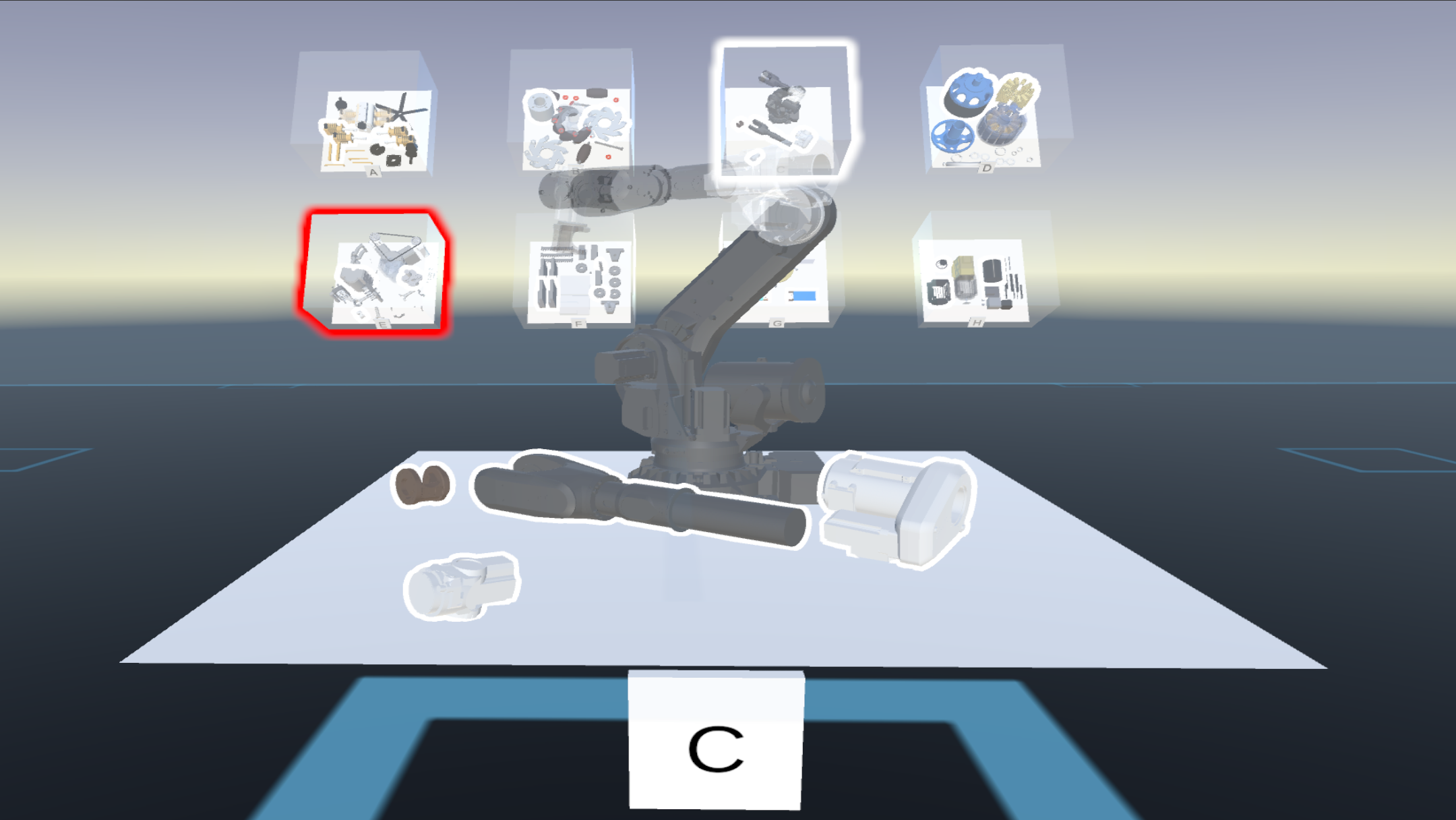

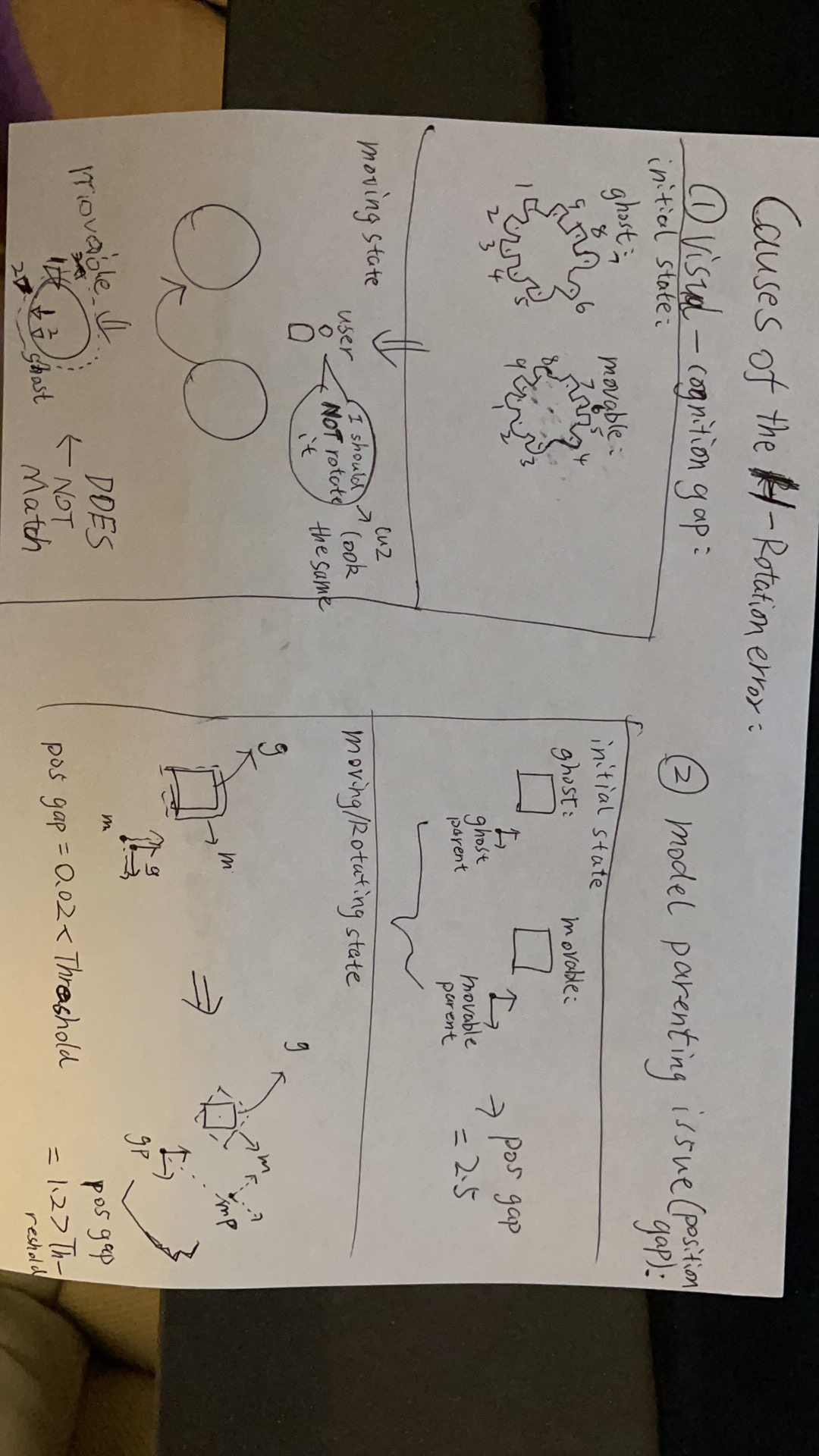

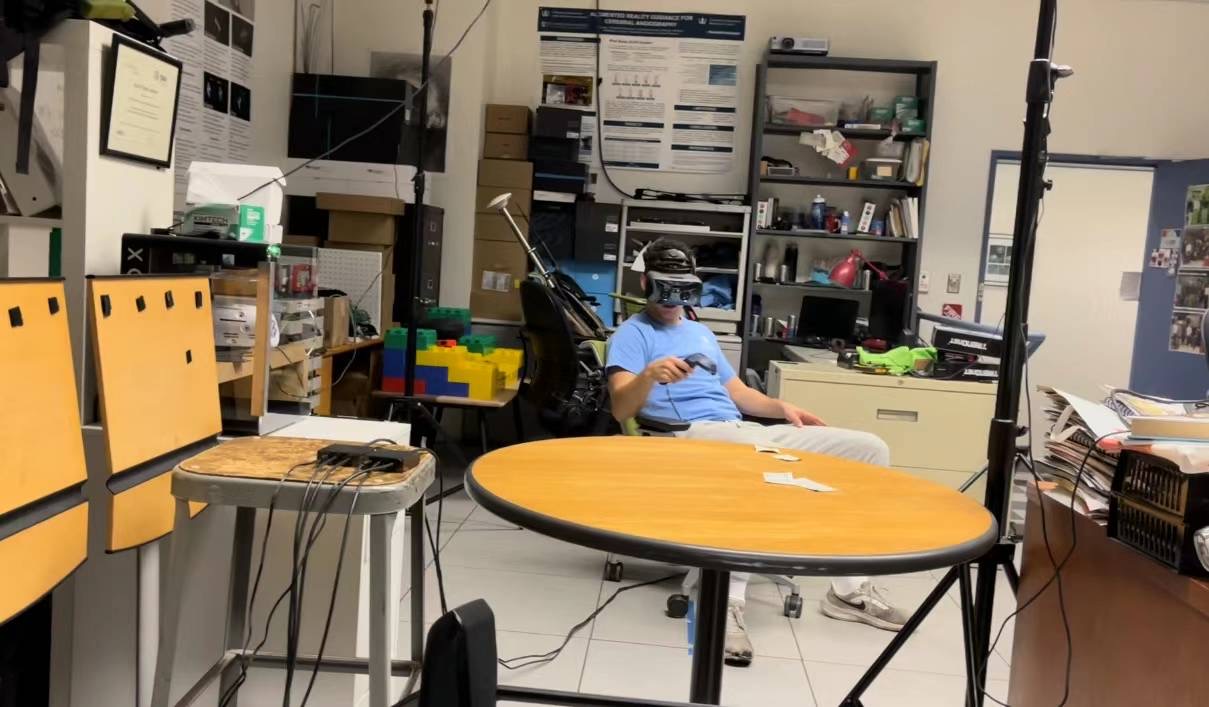

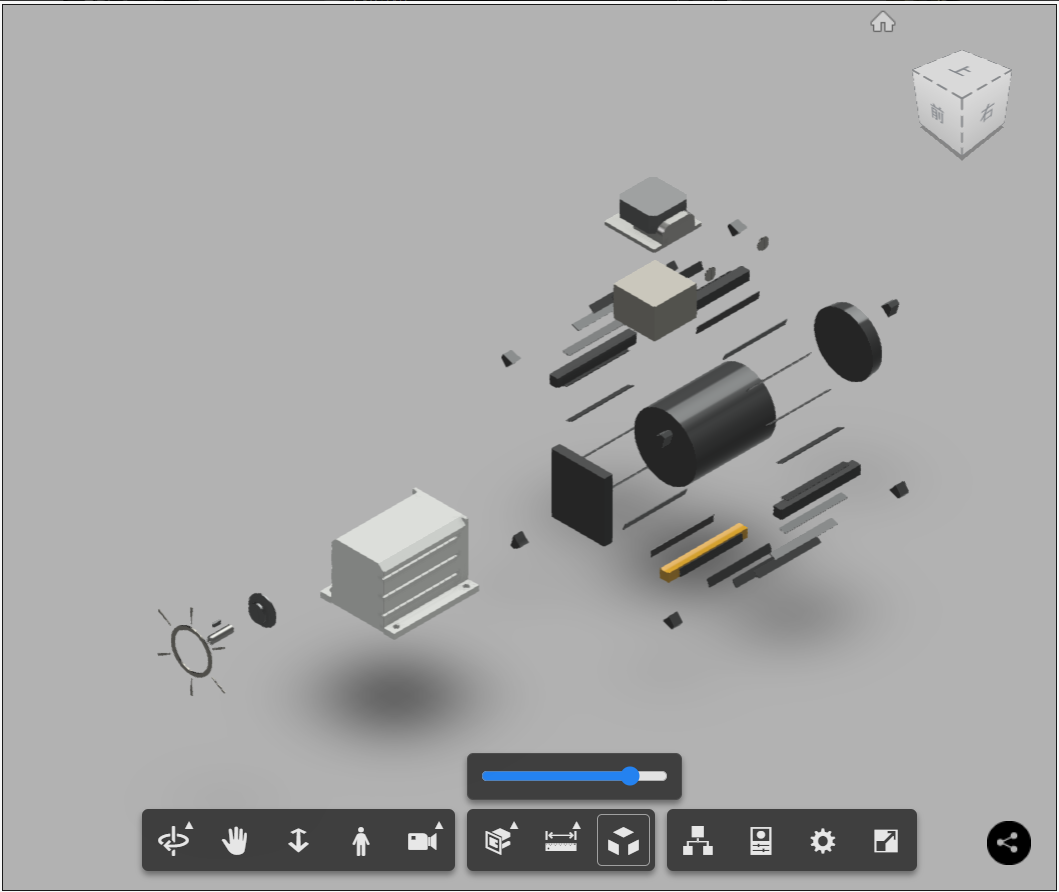

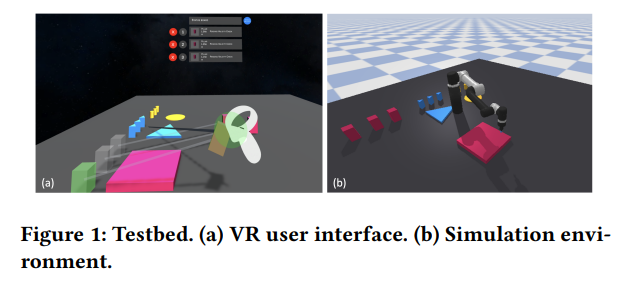

The previous project introduced "virtual scenes". To send instructions to a robot in the real world, the user has to edit task objects in such a virtual scene, presented in traditional PC software with a 2D UI. However, if a user wants to move and rotate a virtual replica and then fit it into a case, he or she has to manipulate the object through a long series of mouse and keyboard inputs, along with frequent camera view adjustments (to better perceive the scene environment and conditions). This leaves much room for improvement in convenience, comfort, and efficiency. To find a more user-friendly approach to object manipulation, task assignment, and monitoring, we in the CGUI Lab, led by Prof. Steven, proposed an XR solution that allows users to perceive the working environment intuitively and manipulate objects directly with their hands, which suggests that teleoperation can be performed with much less effort.

Imagine a scenario where not just one robot is under your command, but an entire complex system. What if, as a single user, you could supervise, manage, and teleoperate multiple robots simultaneously? This introduces a classic challenge in UI/UX design: managing multiple parallel tasks efficiently and effectively. Building on the foundation of previous work, our current exploration dives deeper into the challenges of virtual reality (VR) teleoperation and remote task guidance. Specifically, we are intrigued by scenarios where a remote user assigns tasks to local technicians or robots across multiple sites.

These scenarios raise new research directions and questions, such as:

- How to understand the nature of parallel task management?

- How to research and design a new 3D GUI system in VR/AR that can handle such multiple teleoperation tasks?

- Which GUI layouts increase efficiency and effectiveness, and why?

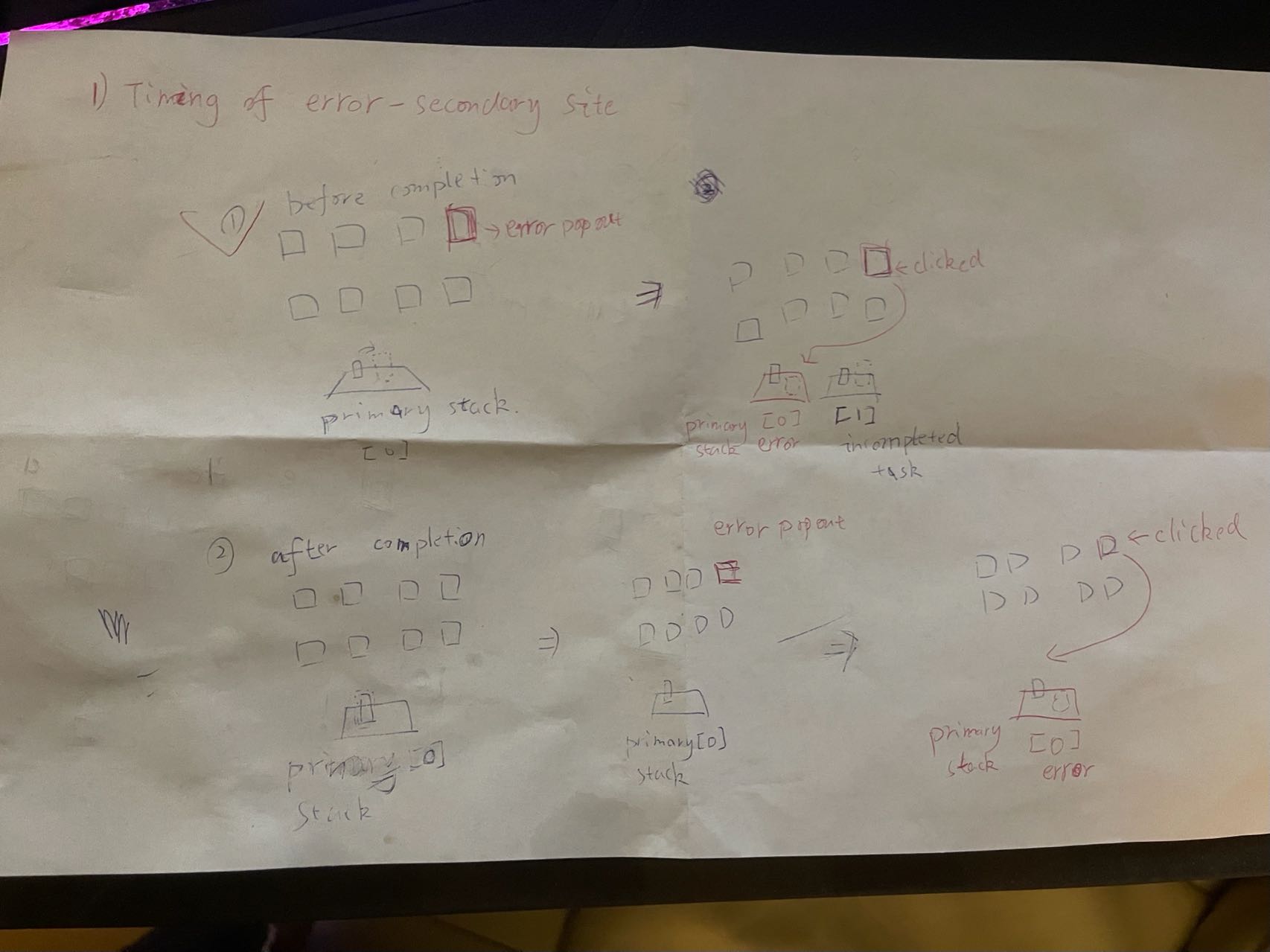

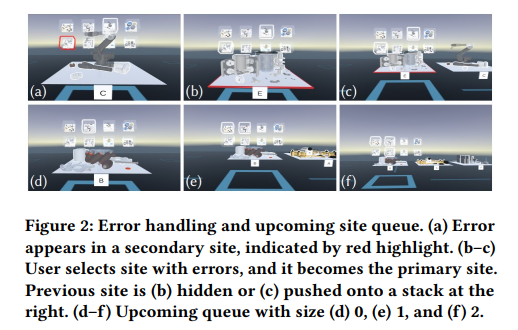

- How does the user handle interruptions during teleoperation (e.g., when one robot encounters an error)?

- How to help the user transition smoothly from one task to another while maintaining awareness of the other sites?

......

Since this is an ongoing project, out of respect for my own and my partners' rights, I cannot disclose further insights.

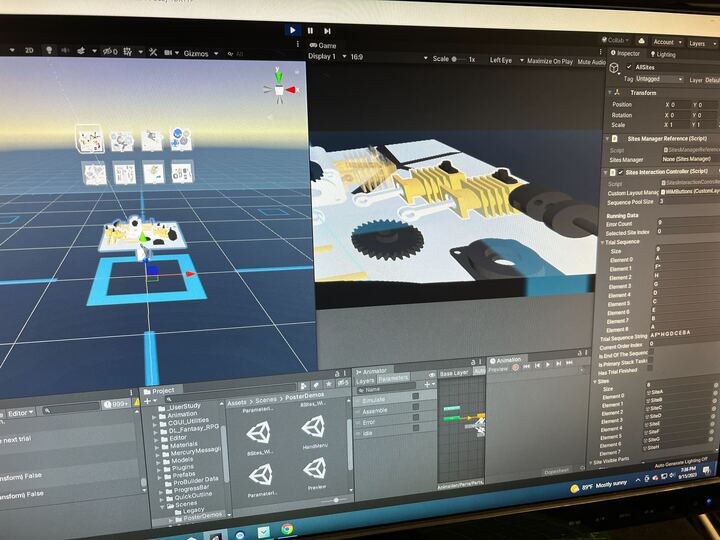

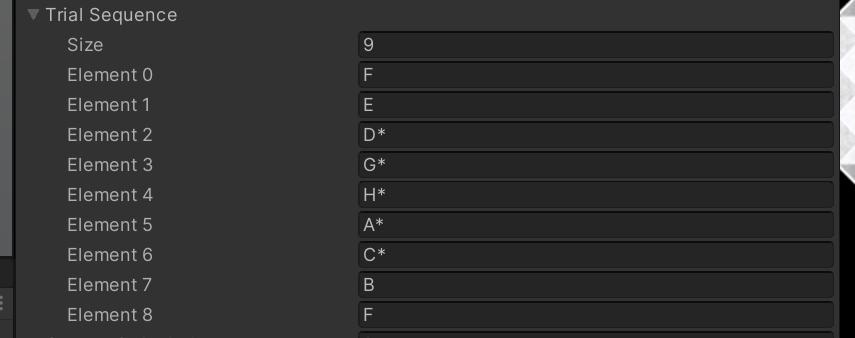

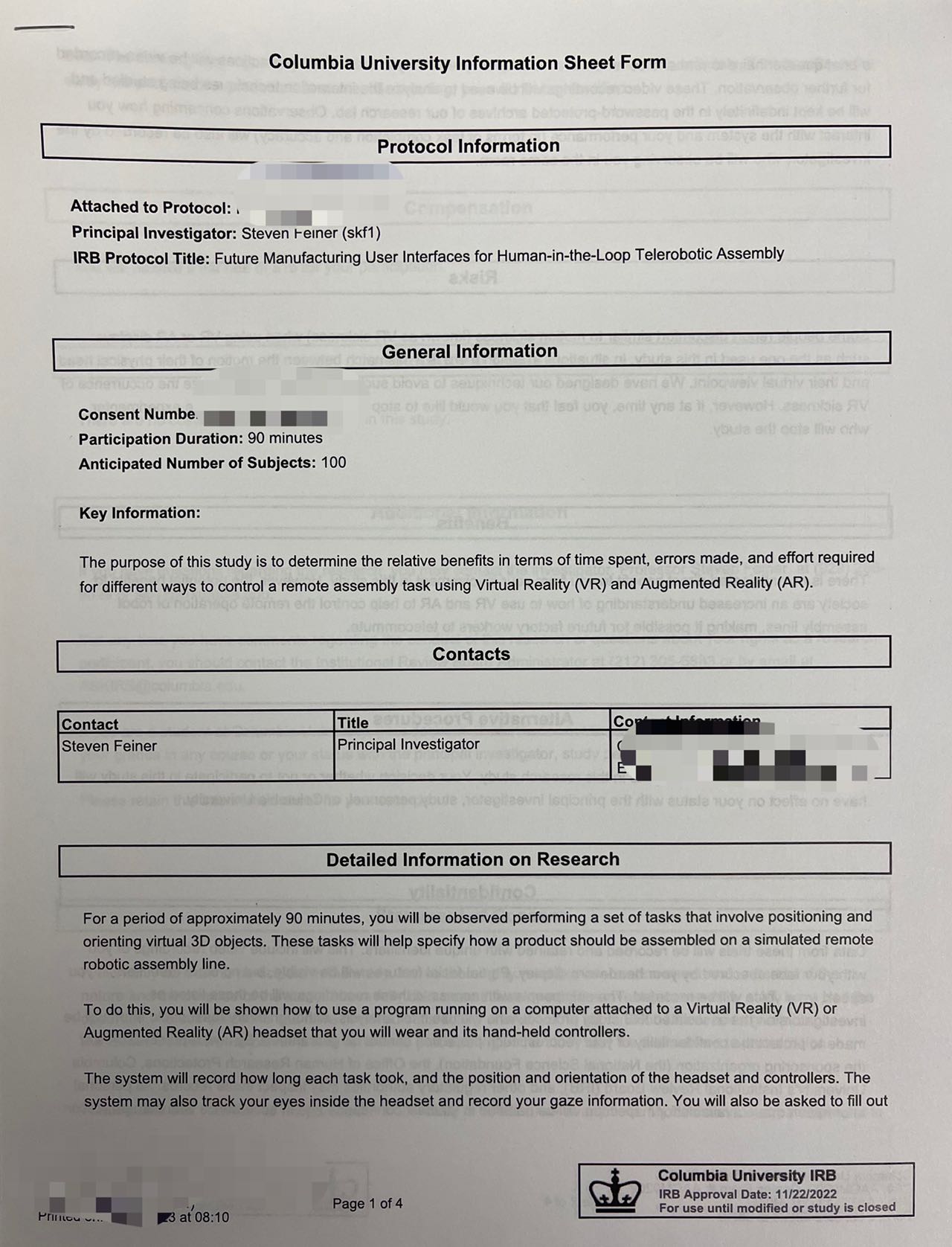

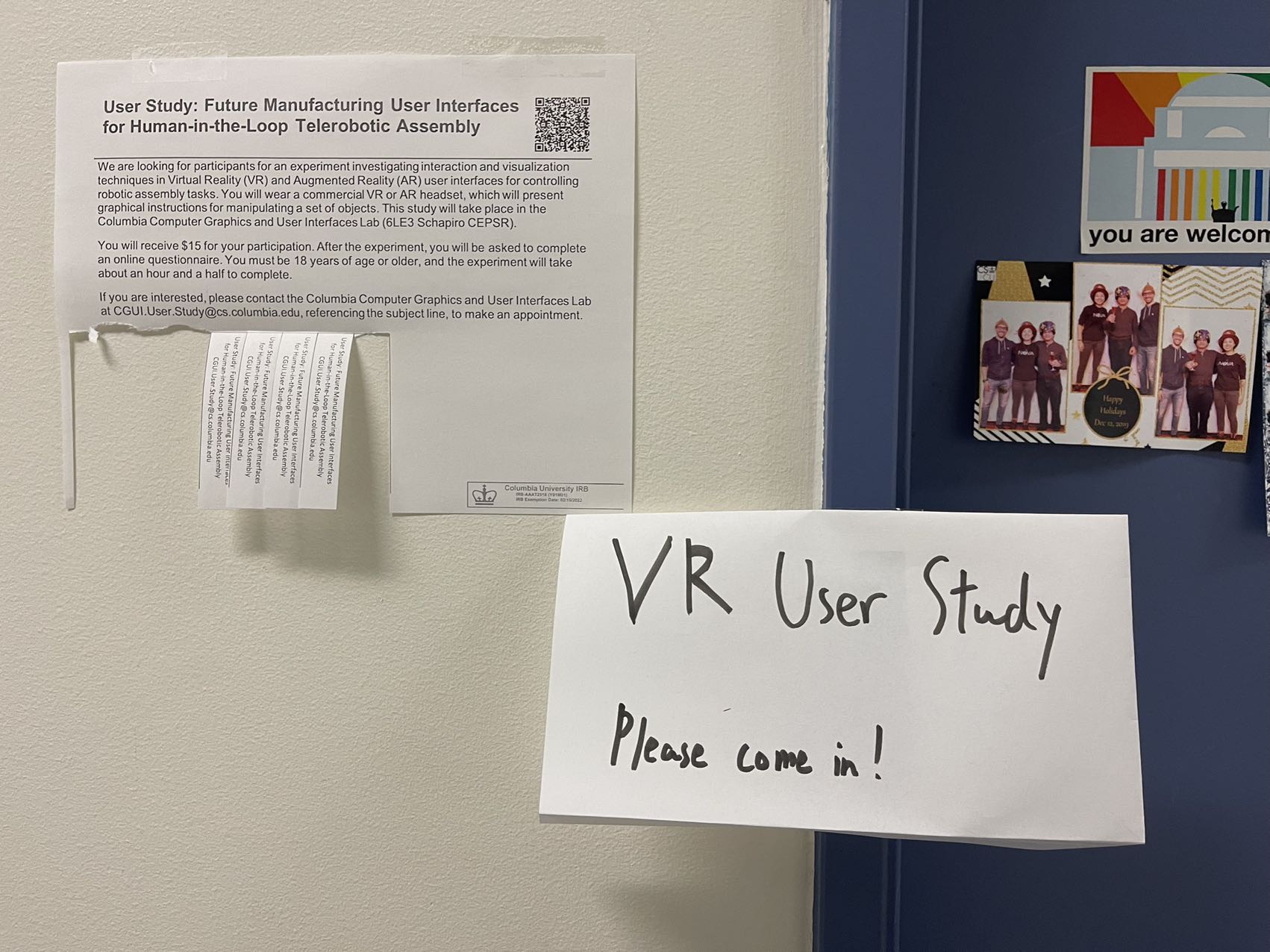

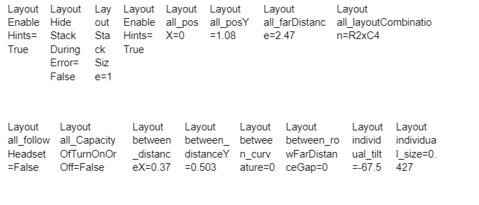

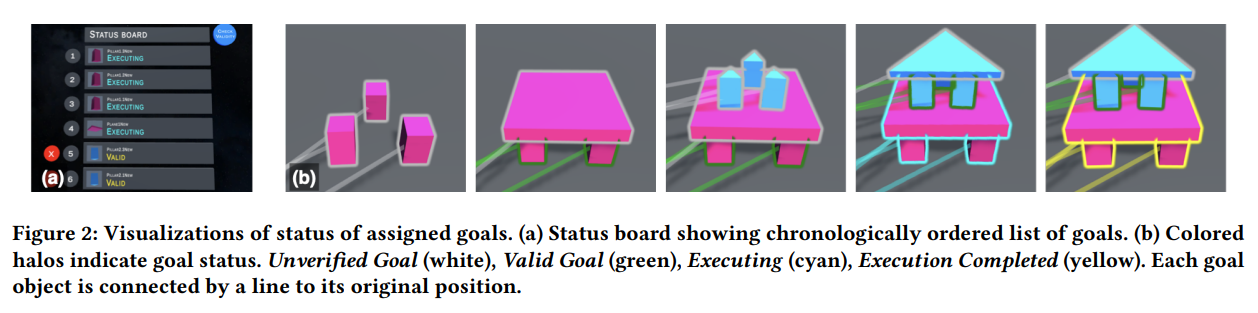

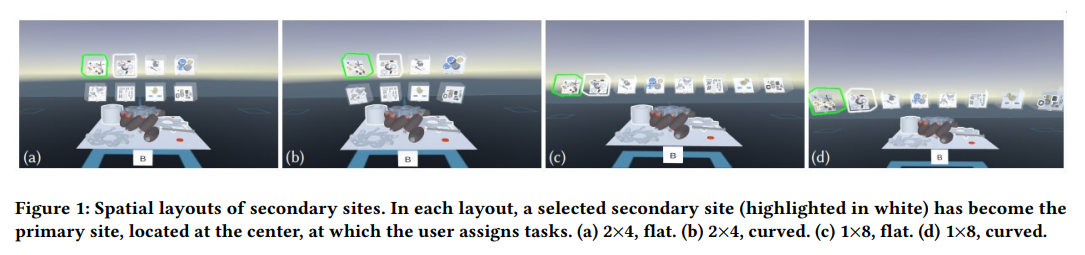

The questions listed above are part of the agenda of the project FMRG: Multi-Robot, also known as Multi-Agent, Multi-Site. In search of answers and solutions to them, our recent work carried out a study that introduced an instrumented VR testbed to examine how different spatial layouts of site representations influence user performance. Furthermore, we explore strategies to assist the remote user in addressing errors and interruptions from secondary sites and in transitioning between sites. Our pilot study sheds light on how these factors interact with user performance.

For more details about the previous and current studies, please see our paper. Details of future studies cannot be disclosed until the next publication.

Our recent work led to a publication accepted at ACM SUI 2023, "A Testbed for Exploring Virtual Reality User Interfaces for Assigning Tasks to Agents at Multiple Sites". Our future efforts will dive deeper into interruption handling and parallel multi-task management, targeting future conferences and journals such as VR, ISMAR, and CHI, depending on our schedule and progress. As mentioned, since this is an ongoing project, I cannot disclose the details of our future work; thank you for understanding.

A short poster demo of the multi-robot interfaces, ACM SUI 2023

- I iteratively design and develop the VR system in Unity based on the shifting requirements of each implementation.

- I lead multiple pilot and user studies, and collect and interpret the data.

- I assist in study design, paper writing, and the publication process.

- I contribute creative ideas and insights to the weekly research discussion.

- I actively communicate and collaborate with other folks in the lab.